Confidence Levels 101: How to Gauge LLM Understanding And Avoid Hallucinations

AI can’t just sound confident – it needs to be right. This post explains how confidence scoring helps support teams catch low-certainty answers before they cause harm.

.png)

Imagine this happening inside your contact center: A cancer patient calls to confirm their benefits.

The AI assistant responds instantly:

“Your claim has been denied. You’re not eligible.”

The patient is stunned. The AI sounded confident – professional, even empathetic.

But here’s what you didn’t see: It was only 52% sure of that answer.

No flag. No escalation.

Just an AI hallucination, delivered straight to the person who needed help most.

This isn't science fiction. This is what happens when systems are designed to generate answers but not to question their own certainty.

Gartner, Magic Quadrant for CCaaS, 2025: “Generative AI errors in regulated workflows can lead to compliance failures and customer harm, especially in healthcare.”

When AI hallucinates, you pay the price

Most vendors frame hallucinations as a harmless glitch. But in regulated industries, they're a liability.

A hallucinated output isn't just a wrong answer. It can lead to:

- Denial of coverage when the patient is eligible

- Approving a suspicious claim that should trigger escalation

- Misrouting a fraud dispute to the wrong team

- Auto-canceling a legitimate subscription

In each case, the cost is real: regulatory exposure, damaged brand trust, customer churn, and internal fire drills.

Gartner, Magic Quadrant for CCaaS, 2025: “Hallucinated responses in CX AI deployments are shifting from fringe cases to operational liabilities.”

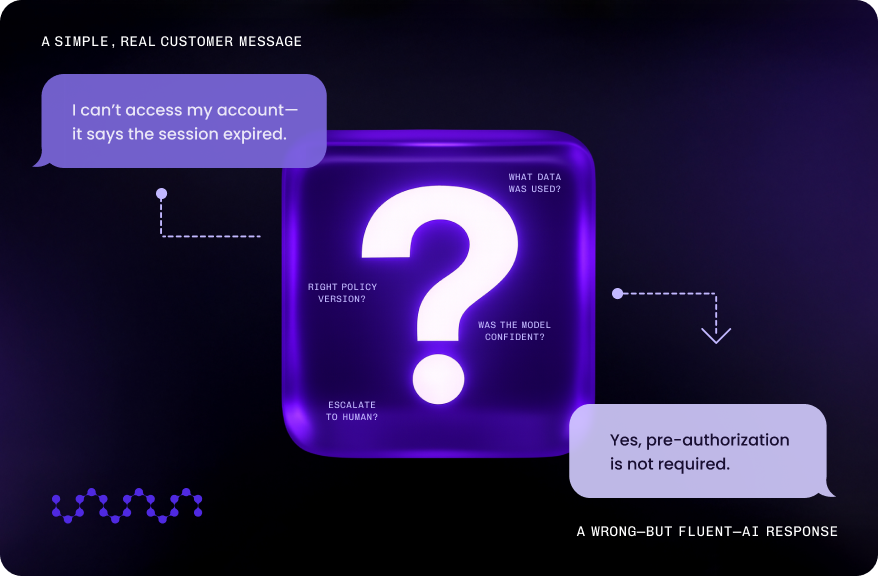

The Black Box problem

The root problem remains: most AI platforms don't show you how confident they are in the answer.

Traditional AI tools are what we call black boxes. You give them a prompt, they give you a response, but you can’t see how or why they made that decision.

You have no transparency into:

- The data the model used

- How confident it was in the output

- What should happen if that confidence is low

This creates a dangerous dynamic in your CX operations: the AI sounds confident, but it may be wrong. You assume it's right, but you have no way to tell.

Gartner, Magic Quadrant for CCaaS, 2025: “The need for decision-level control in AI workflows is especially critical in regulated industries like healthcare and finance.”

Real-World Black Box Examples

- Healthcare: A generative assistant tells a patient they don’t need pre-authorization for a specialist visit. The source was outdated. The model didn’t know. The patient ends up with a $1,200 surprise bill.

- Insurance: An AI agent auto-approves a low-value claim. The model was trained on limited data and failed to detect fraud signals that a human would have caught.

- FinServ: An LLM generates a payment explanation that mixes two customer accounts. The error isn’t caught until the customer complains to the regulator.

Forrester Wave: Conversation Intelligence, Q2 2025: “While many contact centers experiment with AI, few have implemented robust fallback orchestration or risk thresholds.”

Confidence Scoring: The missing link in AI governance

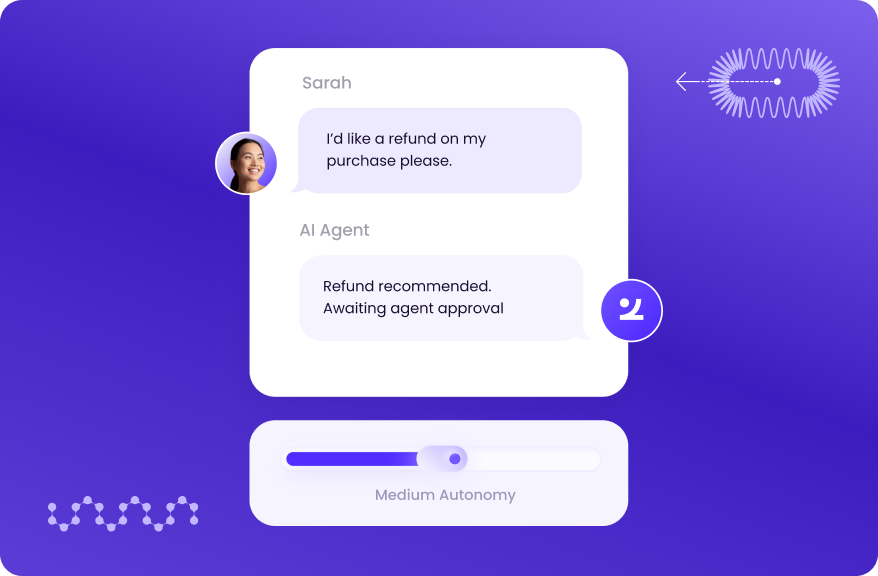

You shouldn't let your AI guess, unless you've told it when that's okay. You need a Confidence Score built into the core of your platform. Rather than blindly trusting the model's output, you interrogate it.

A Confidence Score calculates a real-time score that estimates how likely the AI is to be correct. More importantly, you define what happens if the model isn’t confident enough.

Think of it like a pilot on an autopilot system. The plane is flying itself, handling routine navigation (automation). But the pilot, your operations team, is watching the stress gauges.

If the system's confidence gauge drops, say, due to severe turbulence or a sudden wind shear, the autopilot doesn't just guess or try a risky maneuver. It immediately signals the pilot to take the controls and follow a structured fallback procedure.

You decide the threshold for that turbulence, and you decide whether the system attempts a simple correction or hands control to a human agent right away. This ensures automation only runs when the signals say it’s safe.

How Confidence Score works: AI with guardrails

.png)

Confidence Score isn't just a diagnostic tool. It's a decision gate. You analyze multiple factors before delivering an answer.

These signals are combined into a single Confidence Score. Then, based on your thresholds, you route the case accordingly.

Confidence Score routing table

This is real-time routing logic that ensures automation only runs when it’s safe to do so.

The bigger picture: Control in the age of AI

Confidence scoring isn't just about better answers; it’s about operational control. When AI is deeply embedded in your CX stack, your team needs a governor. You need a way to decide when automation runs, and when it shouldn’t.

Here’s what that unlocks:

Compliance

You avoid hallucinated advice in regulated workflows by ensuring low-confidence outputs never

Avoid hallucinated advice in regulated workflows by ensuring low-confidence outputs never reach customers unchecked.

In healthcare, finance, and insurance, Gartner notes that AI governance frameworks with decision-level thresholds will be a regulatory requirement, not a best practice, by 2027.

Efficiency

You don’t escalate every gray-area case. You escalate only when the AI isn’t confident. This means fewer false positives, less noise for your agents, and higher trust in self-service.

Forrester Wave: Customer Service Solutions, Q1 2024: “Confidence-based AI workflows are critical to scaling automation without increasing agent burden.”

Trust

Operations leaders and compliance teams get transparency and control over automation outcomes. Agents get clarity on what AI can handle and where they need to step in.

Forrester Wave: Conversation Intelligence, Q2 2025: “Vendors embedding explainability and fallback logic are better positioned to support AI in complex CX workflows.”

Scalability

By gating automation with confidence scores, you can safely scale AI across new use cases, channels, and regions without increasing risk.

AI outcomes you can trust

You get control, not guesswork. AI runs the playbook, but only when the signals say it's safe. Business logic defines the rules. Confidence scores trigger the right path. Every decision is logged, explainable, and auditable.

- For the Regulatory-Driven Optimizer (compliance, legal, governance leads): Confidence scoring gives you risk mitigation, traceability, and defensible audit trails across every decision point.

- For CX Operations (VP of Support, CX Strategy): It means fewer escalations, less noise, and AI you can actually trust to drive outcomes without babysitting it.

- For IT & Systems Leaders: It means automation you can deploy with confidence, knowing it won’t operate outside defined boundaries or expose the business to avoidable risk.

This is the future of enterprise automation: structured, safe, and confidence-aware. Most tools optimize for output. You need a tool that optimizes for outcomes you can trust.

Want to see Confidence Score in action? Book a custom demo and we'll show you how it works with your environment: real data, real logic, real results.

Frequently Asked Questions

What are LLM confidence levels?

LLM confidence levels indicate how certain a language model is about the accuracy of its generated response, typically expressed as a probability score between 0 and 1.

What causes AI hallucinations in large language models?

AI hallucinations occur when LLMs generate plausible-sounding but factually incorrect or fabricated information, often due to gaps in training data, ambiguous prompts, or the model's tendency to prioritize coherence over accuracy.

How can confidence scores help reduce hallucinations?

Confidence scores allow systems to flag low-certainty responses for human review, route uncertain queries to alternative workflows, or trigger additional verification steps before presenting information to users.

Why do LLMs sometimes sound confident when they're wrong?

LLMs are trained to generate fluent, coherent text, which can make incorrect responses appear authoritative even when the underlying confidence is low or the information is fabricated.

How should enterprises handle low-confidence AI responses in customer service?

Enterprises should implement guardrails that escalate low-confidence responses to human agents, use structured decision trees to guide AI outputs, or require verification before presenting answers in regulated or high-stakes scenarios.

What role does workflow automation play in managing AI confidence?

Workflow automation can route AI interactions based on confidence thresholds, ensuring complex or uncertain cases are handled with appropriate oversight while allowing high-confidence responses to proceed autonomously.

.webp)

.svg)

.svg)

.svg)